
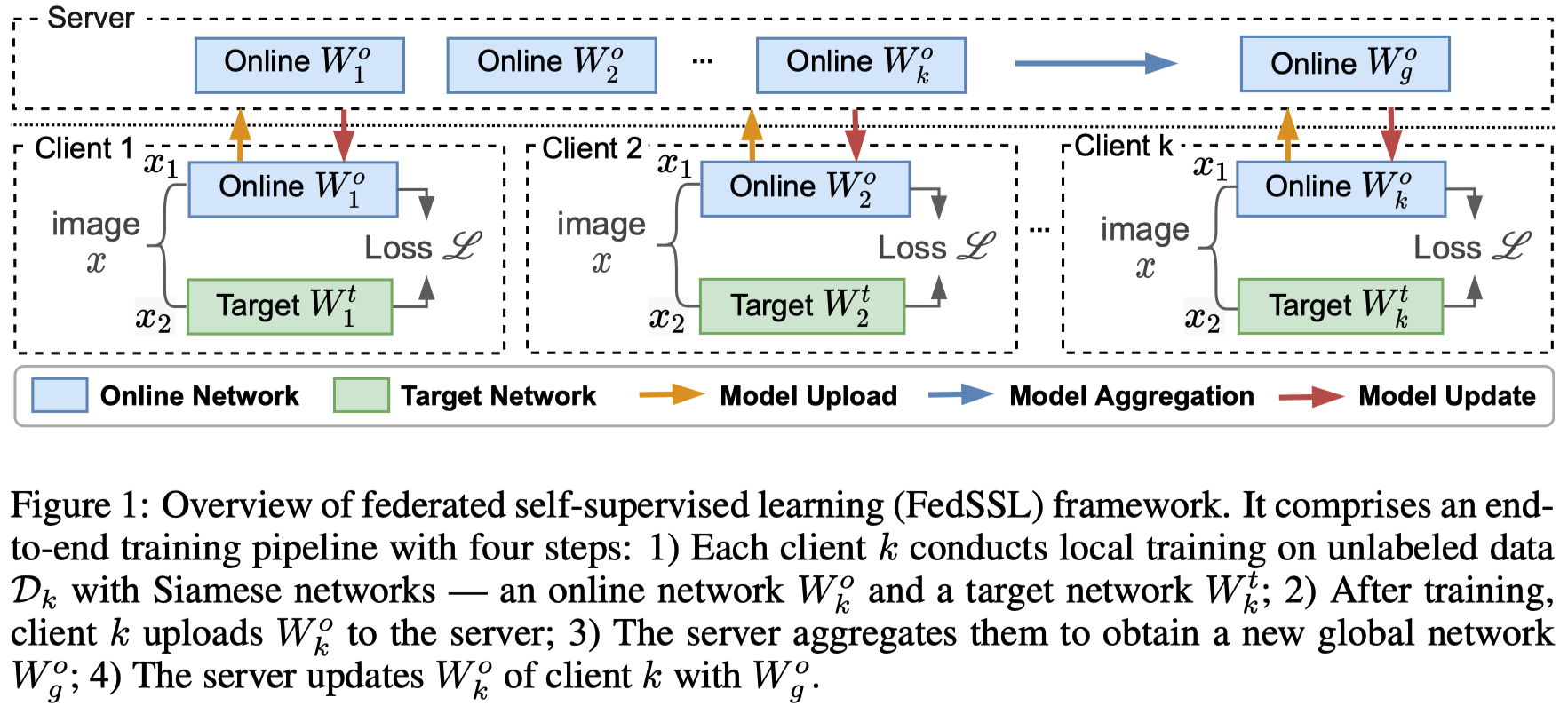

Federated Self-supervised Learning (FedSSL)

Also known as Federated Unsupervised Representation Learning (FedU).

A common limitation of existing federated learning (FL) methods is that they rely heavily on labeled data on decentralized clients. We propose a federated self-supervised learning framework (FedSSL) that learns visual representations from decentralized data without labels.

This repository is the code for two papers:

- Divergence-aware Federated Self-Supervised Learning, ICLR'2022.

- Collaborative Unsupervised Visual Representation Learning From Decentralized Data, ICCV'2021.

The framework implements four self-supervised learning (SSL) methods based on Siamese networks in a federated manner:

- BYOL

- SimSiam

- MoCo (MoCoV1 & MoCoV2)

- SimCLR

Training

You can train with any of the FedSSL methods listed above or with our proposed FedEMA method.

Save the global model after training; the evaluation scripts load it from disk.

FedEMA

Run FedEMA with auto scaler $\tau=0.7$:

python applications/fedssl/main.py --task_id fedema --model byol \
--aggregate_encoder online --update_encoder dynamic_ema_online --update_predictor dynamic_dapu \
--auto_scaler y --auto_scaler_target 0.7 2>&1 | tee log/fedema.log

Run FedEMA with constant weight scaler $\lambda=1$:

python applications/fedssl/main.py --task_id fedema --model byol \
--aggregate_encoder online --update_encoder dynamic_ema_online --update_predictor dynamic_dapu \
--weight_scaler 1 2>&1 | tee log/fedema.log
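The two scalers control how much of a client's local model survives when the global model arrives. The following is a minimal sketch of the update rule as described in the ICLR'2022 paper, not the repository's implementation: the decay is $\mu = \min(\lambda \lVert W_g - W_k \rVert, 1)$, the constant scaler sets $\lambda$ directly, and the auto scaler sets $\lambda = \tau / \lVert W_g - W_k \rVert$ from the divergence observed when a client first participates. The function names and the flat-list weight representation are assumptions made for illustration.

```python
import math


def l2_divergence(global_weights, local_weights):
    """Euclidean distance between two flattened weight vectors."""
    return math.sqrt(
        sum((g - w) ** 2 for g, w in zip(global_weights, local_weights))
    )


def auto_scaler(global_weights, local_weights, tau=0.7):
    """Hypothetical helper: choose lambda so the first decay equals tau."""
    divergence = l2_divergence(global_weights, local_weights)
    if divergence == 0:
        return 0.0  # identical models, so there is no local knowledge to keep
    return tau / divergence


def ema_update(global_weights, local_weights, scaler):
    """Blend local and global weights with a divergence-aware decay mu."""
    mu = min(scaler * l2_divergence(global_weights, local_weights), 1.0)
    updated = [
        mu * w + (1.0 - mu) * g
        for g, w in zip(global_weights, local_weights)
    ]
    return updated, mu
```

With a larger divergence, mu grows and the client keeps more of its own model; once mu is capped at 1, the global model is ignored for that round.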

Other SSL methods

Run other FedSSL methods:

python applications/fedssl/main.py --task_id fedbyol --model byol \
--aggregate_encoder online --update_encoder online --update_predictor global

To run another SSL method, replace byol in --model byol with simclr, simsiam, moco, or moco_v2.
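To run several methods in sequence, the command above can be generated per model. This is a small sketch that only builds the argument lists using the flags shown in this README; the fed<model> naming for --task_id and the build_command helper are assumptions, and the same encoder and predictor flags are reused for every model as the instruction above suggests.

```python
import shlex

# Values accepted by --model according to this README.
SSL_MODELS = ["byol", "simsiam", "simclr", "moco", "moco_v2"]


def build_command(model):
    """Return the training command for one SSL method as an argv list."""
    return [
        "python", "applications/fedssl/main.py",
        "--task_id", f"fed{model}",  # assumed naming scheme
        "--model", model,
        "--aggregate_encoder", "online",
        "--update_encoder", "online",
        "--update_predictor", "global",
    ]


if __name__ == "__main__":
    # Print the commands; pass each list to subprocess.run to execute them.
    for model in SSL_MODELS:
        print(shlex.join(build_command(model)))
```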

Evaluation

You can evaluate the saved model with either linear evaluation or semi-supervised evaluation.

Linear Evaluation

python applications/fedssl/linear_evaluation.py --dataset cifar10 \
--model byol --encoder_network resnet18 \
--model_path <path to the saved model with postfix '.pth'> \
2>&1 | tee log/linear_evaluation.log

Semi-supervised Evaluation

python applications/fedssl/semi_supervised_evaluation.py --dataset cifar10 \
--model byol --encoder_network resnet18 \
--model_path <path to the saved model with postfix '.pth'> \
--label_ratio 0.1 --use_MLP \
2>&1 | tee log/semi_supervised_evaluation.log
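Here --label_ratio 0.1 means that only 10% of the training labels are used. As an illustration of what such a split can look like, the sketch below draws a class-balanced labeled subset; whether the repository samples per class or uniformly is not stated here, so the stratified strategy and the sample_labeled_indices helper are assumptions.

```python
import random
from collections import defaultdict


def sample_labeled_indices(labels, label_ratio=0.1, seed=0):
    """Pick a per-class fraction of sample indices to treat as labeled."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    chosen = []
    for label in sorted(by_class):
        indices = by_class[label]
        # Keep at least one labeled sample for every class.
        keep = max(1, round(len(indices) * label_ratio))
        chosen.extend(rng.sample(indices, keep))
    return sorted(chosen)
```

The remaining indices would be treated as unlabeled during fine-tuning.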

File Structure

├── client.py <client implementation of federated learning>
├── communication.py <constants for model update>
├── dataset.py <dataset for semi-supervised learning>
├── eval_dataset.py <dataset preprocessing for evaluation>
├── knn_monitor.py <kNN monitoring>
├── main.py <entry point for training>
├── model.py <SSL models>
├── resnet.py <network architectures used>
├── server.py <server implementation of federated learning>
├── transform.py <image transformations>
├── linear_evaluation.py <linear evaluation of models after training>
├── semi_supervised_evaluation.py <semi-supervised evaluation of models after training>
└── utils.py

Citation

If you use this code in your research, please cite the following papers.

@inproceedings{zhuang2022fedema,
  title={Divergence-aware Federated Self-Supervised Learning},
  author={Zhuang, Weiming and Wen, Yonggang and Zhang, Shuai},
  booktitle={International Conference on Learning Representations},
  year={2022},
  url={https://openreview.net/forum?id=oVE1z8NlNe}
}

@inproceedings{zhuang2021fedu,
  title={Collaborative Unsupervised Visual Representation Learning from Decentralized Data},
  author={Zhuang, Weiming and Gan, Xin and Wen, Yonggang and Zhang, Shuai and Yi, Shuai},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={4912--4921},
  year={2021}
}

@article{zhuang2022easyfl,
  title={{EasyFL}: A low-code federated learning platform for dummies},
  author={Zhuang, Weiming and Gan, Xin and Wen, Yonggang and Zhang, Shuai},
  journal={IEEE Internet of Things Journal},
  year={2022},
  publisher={IEEE}
}